Request for Product: Pipeline Replay

Everyone is focused on instrumenting API calls to LLM providers, but that misses the bigger picture.

I’ve been working on extractGPT, a tool powered by large language models (LLMs) that extracts structured data from web pages.

I recently wanted to switch the underlying model from OpenAI’s old GPT-3 model to the more affordable ChatGPT model. But first, I needed to make sure that the new model would perform just as well for my use case.

There are dozens[1] of startups building tools for instrumenting API calls to LLMs. They let you do useful things like see cost and latency, gather user feedback, evaluate different prompts, and collect examples for fine-tuning. But because they only instrument the API call, they can't answer the question I ultimately care about: if I change part of the pipeline, will users get better or worse results?

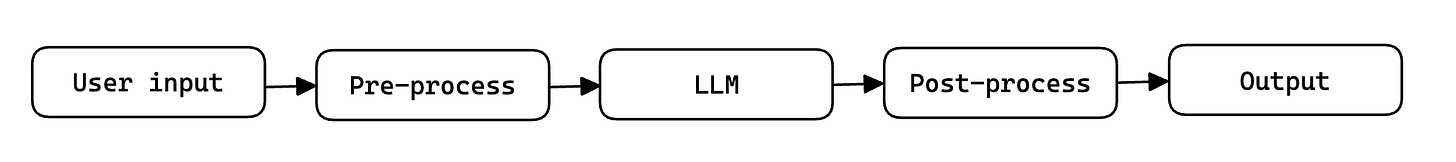

LLMs are great, but to build a useful production app, you often need to do a lot of pre-processing before calling them and post-processing on their results to get acceptable output.

For example, in the case of extractGPT, here are some of the steps in the pipeline:

I’d love a tool that lets me instrument the end-to-end pipeline, then replay examples whenever I make a change at any step. Because the existing tools only instrument the LLM step, it’s hard to experiment with things like:

- Chunking changes, like splitting the page into smaller chunks that can run in parallel to speed up the pipeline.
- Multi-prompt changes: you may call an LLM several times for each request, with the output of the first call used as the input to subsequent calls. In these cases, you'll often want to tweak all the prompts together.
- End-to-end changes, like swapping out the model and some of the post-processing logic at the same time. Different prompts and models may need different kinds of post-processing to produce reasonable results.
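Here is a minimal sketch of what end-to-end instrumentation could look like, assuming the pipeline is built from plain Python functions. Everything in it is hypothetical: the `Tracer` class, the step names, the toy HTML-to-CSV extraction, and `fake_llm`, which stands in for a real model call. The idea it illustrates is that every step's input and output gets recorded, not just the LLM call, and that the chunk size, the model, and the post-processing are all parameters, so a replay can change any of them alone or together.

```python
import re
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


class Tracer:
    """Records the input and output of every pipeline step, not just the LLM call."""

    def __init__(self) -> None:
        self.records: list[dict[str, Any]] = []
        self._lock = threading.Lock()  # LLM steps may run in parallel threads

    def step(self, name: str, fn: Callable, *args: Any) -> Any:
        output = fn(*args)
        with self._lock:
            self.records.append({"step": name, "input": args, "output": output})
        return output


def clean_html(html: str) -> list[str]:
    """Pre-processing: replace tags with line breaks and keep non-empty lines."""
    text = re.sub(r"<[^>]+>", "\n", html)
    return [line.strip() for line in text.splitlines() if line.strip()]


def split_chunks(lines: list[str], size: int) -> list[list[str]]:
    """Pre-processing: group lines into chunks that can be sent in parallel."""
    return [lines[i : i + size] for i in range(0, len(lines), size)]


def fake_llm(chunk: list[str]) -> str:
    """Hypothetical stub for a model call: turns 'Name $price' lines into CSV text."""
    return "\n".join(line.replace(" $", ",") for line in chunk)


def parse_rows(outputs: list[str]) -> list[tuple[str, str]]:
    """Post-processing: parse CSV text into rows, skipping malformed lines."""
    rows = []
    for text in outputs:
        for line in text.splitlines():
            name, _, price = line.partition(",")
            if name and price:
                rows.append((name.strip(), price.strip()))
    return rows


def run_pipeline(
    html: str,
    tracer: Tracer,
    llm: Callable[[list[str]], str] = fake_llm,
    post: Callable[[list[str]], list[tuple[str, str]]] = parse_rows,
    chunk_size: int = 2,
) -> list[tuple[str, str]]:
    lines = tracer.step("clean_html", clean_html, html)
    chunks = tracer.step("split_chunks", split_chunks, lines, chunk_size)
    with ThreadPoolExecutor() as pool:
        # pool.map returns results in chunk order even though calls run concurrently.
        outputs = list(pool.map(lambda c: tracer.step("call_llm", llm, c), chunks))
    return tracer.step("post_process", post, outputs)
```

A replay would feed a logged `html` input back through `run_pipeline` with, say, `chunk_size=1`, a different `llm`, and a different `post` at the same time, then compare the new rows (and, if useful, the per-step records) against the logged ones. The lock in `Tracer` keeps the record list safe when chunks are sent to the model from multiple threads.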

I have a hacky setup for doing end-to-end replays. It works like this:

1. Log every relevant parameter to a database when an extraction runs and a user provides feedback on it.
2. A simple Python script fetches a sample of examples (with a mix of positive and negative feedback) and re-runs the pipeline on each one.
3. The script outputs a comparison between the old output and the new output. In my case, I care about a table diff that shows whether a code change causes the output to miss expected rows, add spurious rows, or change cell values.
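The three steps above can be sketched in Python roughly as follows. This is a minimal sketch under several assumptions, not the actual script: it uses SQLite, a hypothetical `runs` table, feedback labels of `"positive"` and `"negative"`, and a table diff that treats the first column as the row key. Under that assumption, a key that disappears counts as a missing row, a new key counts as a spurious row, and a shared key with different values counts as changed cells.

```python
import json
import sqlite3
from typing import Callable, Iterator

Row = tuple[str, ...]  # one extracted table row


def init_db(path: str = ":memory:") -> sqlite3.Connection:
    """Create the hypothetical table that holds logged extractions."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS runs ("
        "id INTEGER PRIMARY KEY, input TEXT, output TEXT, feedback TEXT)"
    )
    return db


def log_run(db: sqlite3.Connection, page: str, rows: list[Row], feedback: str) -> None:
    """Step 1: log the pipeline input, its output, and the user's feedback."""
    db.execute(
        "INSERT INTO runs (input, output, feedback) VALUES (?, ?, ?)",
        (page, json.dumps(rows), feedback),
    )
    db.commit()


def sample_runs(db: sqlite3.Connection, n_per_label: int) -> list[tuple[str, list[Row]]]:
    """Step 2: fetch a random sample mixing positive and negative feedback."""
    sample = []
    for label in ("positive", "negative"):
        cursor = db.execute(
            "SELECT input, output FROM runs WHERE feedback = ? ORDER BY RANDOM() LIMIT ?",
            (label, n_per_label),
        )
        for page, output in cursor:
            # JSON turns tuples into lists, so convert rows back to tuples.
            sample.append((page, [tuple(row) for row in json.loads(output)]))
    return sample


def table_diff(old: list[Row], new: list[Row]) -> dict[str, list]:
    """Step 3: diff two tables, assuming the first column identifies a row."""
    old_by_key = {row[0]: row for row in old}
    new_by_key = {row[0]: row for row in new}
    return {
        "missing": [row for key, row in old_by_key.items() if key not in new_by_key],
        "spurious": [row for key, row in new_by_key.items() if key not in old_by_key],
        "changed": [
            (row, new_by_key[key])
            for key, row in old_by_key.items()
            if key in new_by_key and new_by_key[key] != row
        ],
    }


def replay(
    db: sqlite3.Connection, pipeline: Callable[[str], list[Row]], n_per_label: int = 5
) -> Iterator[tuple[str, dict[str, list]]]:
    """Re-run the changed pipeline on sampled inputs and diff against the logged output."""
    for page, old_rows in sample_runs(db, n_per_label):
        yield page, table_diff(old_rows, pipeline(page))


def changed_pipeline(page: str) -> list[Row]:
    """Hypothetical new pipeline version that drops a row and reformats a price."""
    return [("Apple", "1.00")]


if __name__ == "__main__":
    db = init_db()
    log_run(db, "<ul><li>Apple $1</li><li>Pear $2</li></ul>", [("Apple", "1"), ("Pear", "2")], "positive")
    for page, diff in replay(db, changed_pipeline):
        print(page, diff)
```

In practice, `log_run` would be called from the production pipeline whenever feedback arrives, and `replay` would be given the changed version of the pipeline. Keying rows on the first column is a simplification; if the extracted tables have no natural key, a multiset comparison of whole rows (for example with `collections.Counter`) would still catch missing and spurious rows, but it would report a changed cell as one removed row plus one added row.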

If you’re working on a more robust solution to this problem, I’d love to use it! I’m @kasrak on Twitter.

—

[1] E.g. Promptlayer, Humanloop, Helicone, Promptable, Honeyhive, Vellum, Scale Spellbook